by Tomasz Bak and Fabio Kung

Titus is the Netflix cloud container runtime that runs and manages containers at scale. In the time since it was first presented as an advanced Mesos framework, Titus has transparently evolved from being built on top of Mesos to Kubernetes, handling an ever-increasing volume of containers. As the number of Titus users increased over the years, the load and pressure on the system increased substantially. The original assumptions and architectural choices were no longer viable. This blog post presents how our current iteration of Titus deals with high API call volumes by scaling out horizontally.

We introduce a caching mechanism in the API gateway layer, allowing us to offload processing from singleton leader-elected controllers without giving up the strict data consistency guarantees that clients observe. Titus API clients always see the latest (not stale) version of the data, regardless of which gateway node serves their request and in which order.

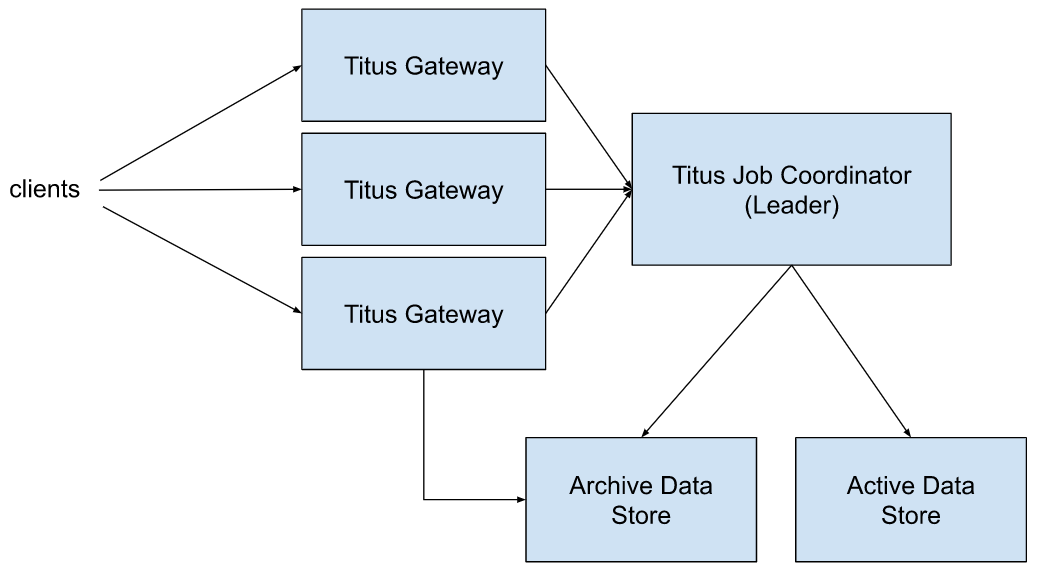

The figure below depicts a simplified high-level architecture of a single Titus cluster (a.k.a. cell):

Titus Job Coordinator is a leader-elected process managing the active state of the system. Active data includes jobs and tasks that are currently running. When a new leader is elected, it loads all data from external storage. Mutations are first persisted to the active data store before the in-memory state is changed. Data for completed jobs and tasks is moved to the archive store first, and only then removed from the active data store and from the leader's memory.

Titus Gateway handles user requests. A user request could be a job creation request, a query to the active data store, or a query to the archive store (the latter handled directly in Titus Gateway). Requests are load balanced across all Titus Gateway nodes. All reads are consistent, so it doesn’t matter which Titus Gateway instance is serving a query. For example, it is OK to send writes through one instance and do reads from another one with full read consistency guarantees. Titus Gateways always connect to the current Titus Job Coordinator leader. During leader failovers, all writes and reads of the active data are rejected until a connection to the active leader is re-established.

In the original version of the system, all queries to the active data set were forwarded to a singleton Titus Job Coordinator. The freshest data is served to all requests, and clients never observe read-your-write or monotonic-read consistency issues¹:

Data consistency on the Titus API is highly desirable as it simplifies client implementation. Causal consistency, which includes read-your-writes and monotonic-reads, frees clients from implementing client-side synchronization mechanisms. In PACELC terms we choose PC/EC and keep the same level of availability for writes as our previous system while improving our theoretical availability for reads.

For example, a batch workflow orchestration system may create multiple jobs which are part of a single workflow execution. After the jobs are created, it monitors their execution progress. If the system creates a new job, followed immediately by a query to get its status, and there is a data propagation lag, it might decide that the job was lost and a replacement must be created. In that scenario, the system would need to deal with the data propagation latency directly, for example, by use of timeouts or client-originated update tracking mechanisms. As Titus API reads always consistently reflect the up-to-date state, such workarounds are not needed.

With traffic growth, a single leader node handling all request volume started becoming overloaded. We started seeing increased response latencies and leader servers running at dangerously high utilization. To mitigate this issue we decided to handle all query requests directly from Titus Gateway nodes but still preserve the original consistency guarantees:

The state from Titus Job Coordinator is replicated over a persistent stream connection, with low event propagation latencies. A new wire protocol provided by Titus Job Coordinator allows monitoring of the cache consistency level and guarantees that clients always receive the latest data version. The cache is kept in sync with the current leader process. When there is a failover (because of node failures with the current leader or a system upgrade), a new snapshot from the freshly elected leader is loaded, replacing the previous cache state. Titus Gateways handling client requests can now be horizontally scaled out. The details and workings of these mechanisms are the primary topics of this blog post.

Knowing whether a cache is up to date is easy for systems that were built from the beginning with a consistent data versioning scheme and can depend on clients to follow the established protocol. Kubernetes is a good example here. Each object and each collection read from the Kubernetes cluster has a unique revision, which is a monotonically increasing number. A user may request all changes since the last received revision. For more details, see Kubernetes API Concepts and the Shared Informer Pattern.

In our case, we didn’t want to change the API contract and impose additional constraints and requirements on our users. Doing so would require a substantial migration effort to move all clients off the old API, with questionable value to the affected teams (except for helping us solve Titus’ internal scalability problems). In our experience, such migrations require a nontrivial amount of work, particularly with the migration timeline not fully in our control.

To fulfill the existing API contract, we had to guarantee that for a request received at a time T₀, the data returned to the client is read from a cache that contains all state updates in Titus Job Coordinator up to time T₀.

The path over which data travels from Titus Job Coordinator to a Titus Gateway cache can be described as a sequence of event queues with different processing speeds:

A message generated by the event source may be buffered at any stage. Moreover, as each event stream subscription from Titus Gateway to Titus Job Coordinator establishes a different instance of the processing pipeline, the state of the cache in each gateway instance may be vastly different.

Let’s assume a sequence of events E₁…E₁₀, and their location within the pipeline of two Titus Gateway instances at time T₁:

If a client makes a call to Titus Gateway 2 at time T₁, it will read version E₈ of the data. If it immediately makes a request to Titus Gateway 1, the cache there is behind with respect to the other gateway, so the client might read an older version of the data.

In both cases, data is not up to date in the caches. If a client created a new object at time T₀, and the object value is captured by an event update E₁₀, this object will be missing in both gateways at time T₁. This is a surprise to a client that successfully completed a create request, only for the follow-up query to return a not-found error (a read-your-write consistency violation).
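To make the scenario concrete, here is a minimal Python sketch of two gateway caches that have each applied only a prefix of the event stream. The `GatewayCache` class, the `job-N` identifiers, and the assumption that Titus Gateway 1 has consumed only E₁…E₅ at time T₁ are illustrative and not taken from Titus.

```python
from dataclasses import dataclass, field


@dataclass
class GatewayCache:
    """Hypothetical gateway cache that has applied only part of the event stream."""
    name: str
    applied: dict = field(default_factory=dict)  # object id -> latest value seen

    def apply(self, events):
        for object_id, value in events:
            self.applied[object_id] = value

    def get(self, object_id):
        # None models a not-found response.
        return self.applied.get(object_id)


# Events E1..E10 emitted by the coordinator; E10 carries the client's new object.
events = [(f"job-{i}", f"E{i}") for i in range(1, 11)]

gateway1 = GatewayCache("gateway-1")
gateway2 = GatewayCache("gateway-2")
gateway1.apply(events[:5])  # assumption: gateway 1 has consumed E1..E5 at T1
gateway2.apply(events[:8])  # gateway 2 has consumed E1..E8 at T1

print(gateway2.get("job-8"))   # visible on gateway 2
print(gateway1.get("job-8"))   # not yet visible on gateway 1
print(gateway1.get("job-10"), gateway2.get("job-10"))  # missing on both
```

Without extra synchronization, which answer a client gets depends only on which gateway happens to serve the request.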

The solution is to flush all the events created up to time T₁ and force clients to wait for the cache to receive them all. This work can be split into two different steps, each with its own unique solution.

We solved the cache synchronization problem (as stated above) with a combination of two strategies:

- Titus Gateway <-> Titus Job Coordinator synchronization protocol over the wire.

- Usage of high-resolution monotonic time sources like Java’s nano time within a single server process. Java’s nano time is used as a logical time within a JVM to define an order for events happening in the JVM process. An alternative solution based on an atomic integer generator to order the events would suffice as well. Having a local logical time source avoids issues with distributed clock synchronization.
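As a rough illustration of the two kinds of local logical clock mentioned above, the Python sketch below uses `time.monotonic_ns()` as a stand-in for Java’s nano time and a lock-protected counter as the integer-generator alternative. The function names are hypothetical; Titus itself runs on the JVM.

```python
import itertools
import threading
import time


def nano_clock():
    """Monotonic nanosecond reading; only meaningful within this process."""
    return time.monotonic_ns()


_counter = itertools.count(1)
_counter_lock = threading.Lock()


def counter_clock():
    """Alternative logical clock: a strictly increasing integer sequence."""
    with _counter_lock:
        return next(_counter)


t1, t2 = nano_clock(), nano_clock()
c1, c2 = counter_clock(), counter_clock()
print(t2 >= t1, c2 > c1)
```

Note that a monotonic clock only guarantees that readings never go backwards, so two back-to-back readings may be equal, whereas the counter always yields distinct values.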

If Titus Gateways subscribed to the Titus Job Coordinator event stream without synchronization steps, the amount of data staleness would be impossible to estimate. To guarantee that a Titus Gateway has received all state updates that happened until some time Tₙ, an explicit synchronization between the two services must happen. Here is what the protocol we implemented looks like:

- Titus Gateway receives a client request (queryₐ).

- Titus Gateway makes a request to the local cache to fetch the latest version of the data.

- The local cache in Titus Gateway records the local logical time and sends it to Titus Job Coordinator in a keep-alive message (keep-aliveₐ).

- Titus Job Coordinator saves the keep-alive request together with the local logical time Tₐ of the request arrival in a local queue (KAₐ, Tₐ).

- Titus Job Coordinator sends state updates to Titus Gateway until the former observes a state update (event) with a timestamp past the recorded local logical time (E1, E2).

- At that time, Titus Job Coordinator sends an acknowledgment event for the keep-alive message (KAₐ keep-alive ACK).

- Titus Gateway receives the keep-alive acknowledgment and consequently knows that its local cache contains all state changes that happened up to the time when the keep-alive request was sent.

- At this point the original client request can be handled from the local cache, guaranteeing that the client will get a fresh enough version of the data (responseₐ).
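The steps above can be sketched as a single-process Python simulation. This is a simplified illustration, not the Titus implementation: the `Coordinator` and `Gateway` classes, the counter-based logical clocks, the single in-memory `stream`, and the `heartbeat` helper (which emits a timestamp-only event when no updates are pending) are all assumptions made for the example.

```python
import itertools
from collections import deque


class Coordinator:
    """Toy stand-in for the leader: owns the state and one outbound stream."""

    def __init__(self):
        self._clock = itertools.count(1)  # local logical time (assumed: a counter)
        self._pending = deque()           # (keep_alive_id, arrival_time), oldest first
        self.stream = deque()             # FIFO of messages to a single gateway

    def update(self, key, value):
        """Apply a state change and emit it as a timestamped event."""
        self._emit(("event", key, value))

    def heartbeat(self):
        """Emit a timestamp-only event so pending keep-alives can be acknowledged."""
        self._emit(("noop", None, None))

    def keep_alive(self, keep_alive_id):
        """Record the keep-alive together with its local arrival time."""
        self._pending.append((keep_alive_id, next(self._clock)))

    def _emit(self, message):
        timestamp = next(self._clock)
        self.stream.append(message)
        # Acknowledge every keep-alive that arrived before this event was emitted.
        while self._pending and self._pending[0][1] < timestamp:
            keep_alive_id, _ = self._pending.popleft()
            self.stream.append(("ack", keep_alive_id, None))


class Gateway:
    """Toy gateway: serves reads from a cache once a keep-alive is acknowledged."""

    def __init__(self, coordinator):
        self._coordinator = coordinator
        self._ids = itertools.count(1)  # gateway-local logical time
        self._acked = 0                 # highest acknowledged keep-alive id
        self.cache = {}

    def _consume(self):
        kind, first, second = self._coordinator.stream.popleft()
        if kind == "event":
            self.cache[first] = second
        elif kind == "ack":
            self._acked = max(self._acked, first)

    def query(self, key):
        keep_alive_id = next(self._ids)
        self._coordinator.keep_alive(keep_alive_id)
        # Drain the stream until the acknowledgment for this keep-alive arrives.
        while self._acked < keep_alive_id:
            if not self._coordinator.stream:
                self._coordinator.heartbeat()
            self._consume()
        return self.cache.get(key)


coordinator = Coordinator()
gateway = Gateway(coordinator)
coordinator.update("job-1", "Accepted")
coordinator.update("job-1", "Running")  # not yet consumed by the gateway
status = gateway.query("job-1")
print(status)
```

Because the stream is first-in, first-out, every event emitted before the acknowledgment has already been applied to the cache by the time the acknowledgment is consumed, which is what lets the query be answered locally.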

This process is illustrated by the figure below:

The procedure above explains how to synchronize a Titus Gateway cache with the source of truth in Titus Job Coordinator, but it doesn’t address how the internal queues in Titus Job Coordinator are drained to the point where all relevant messages are processed. The solution here is to add a logical timestamp to each event and guarantee an upper bound on the time interval between consecutive messages emitted in the event stream. If data updates do not produce events often enough, a dummy message is generated and inserted into the stream. Dummy messages guarantee that each keep-alive request is acknowledged within a bounded time, and does not wait indefinitely until some change in the system happens. For example:

Ta, Tb, Tc, Td, and Te are high-resolution monotonic logical timestamps. At time Td a dummy message is inserted, so the interval between two consecutive events in the event stream is always below a configurable threshold. These timestamp values are compared with keep-alive request arrival timestamps to know when a keep-alive acknowledgment can be sent.
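The dummy-message idea can be sketched in a few lines of Python. The function below is a hypothetical, offline illustration: it takes the timestamps of real updates and fills any gap larger than `max_gap` with dummy entries. A real implementation would generate them on a timer as the stream is produced, and the concrete timestamps used here are invented for the example.

```python
def with_heartbeats(event_times, max_gap):
    """Return (timestamp, kind) pairs whose consecutive timestamps differ by at most max_gap."""
    stream = []
    previous = None
    for t in event_times:
        if previous is not None:
            filler = previous + max_gap
            # Insert dummy messages until the next real update is close enough.
            while filler < t:
                stream.append((filler, "dummy"))
                filler += max_gap
        stream.append((t, "update"))
        previous = t
    return stream


# Illustrative timestamps: updates at 0, 1, 2 and 5 with a threshold of 2.
stream = with_heartbeats([0, 1, 2, 5], max_gap=2)
for timestamp, kind in stream:
    print(timestamp, kind)
```

With these inputs the fourth entry is the dummy message, mirroring the role of Td in the description above.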

There are a few optimization techniques that can be used. Here are those implemented in Titus:

- Instead of sending a keep-alive request for each new client request, wait a fixed interval and send a single keep-alive request for all requests that arrived during that time. The maximum rate of keep-alive requests is thus constrained by 1 / max_interval. For example, if max_interval is set to 5ms, the max keep-alive request rate is 200 req / sec.

- Collapse multiple keep-alive requests in Titus Job Coordinator, sending a response to the latest one whose arrival timestamp is earlier than the timestamp of the last event sent over the network. On the Titus Gateway side, a keep-alive response with a given timestamp acknowledges all pending requests with keep-alive timestamps earlier than or equal to the received one.

- Do not wait for cache synchronization on requests that do not have ordering requirements, serving data from the local cache on each Titus Gateway. Clients that can tolerate eventual consistency can opt into this new API for lower response times and increased availability.
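The first two optimizations lend themselves to a short Python sketch. The helper names below (`max_keep_alive_rate`, `PendingKeepAlives`) are hypothetical and only illustrate the arithmetic and the gateway-side bookkeeping; they are not part of the Titus codebase.

```python
import bisect


def max_keep_alive_rate(max_interval_ms):
    """Upper bound on keep-alive requests per second when batching per interval."""
    return 1000 / max_interval_ms


class PendingKeepAlives:
    """Gateway-side set of keep-alive timestamps awaiting acknowledgment."""

    def __init__(self):
        self._timestamps = []  # kept sorted in ascending order

    def add(self, timestamp):
        bisect.insort(self._timestamps, timestamp)

    def acknowledge(self, acked_timestamp):
        """Remove and return every pending timestamp <= acked_timestamp."""
        cut = bisect.bisect_right(self._timestamps, acked_timestamp)
        done = self._timestamps[:cut]
        self._timestamps = self._timestamps[cut:]
        return done


rate = max_keep_alive_rate(5)  # 5 ms batching interval

pending = PendingKeepAlives()
for ts in (10, 20, 30):
    pending.add(ts)
released = pending.acknowledge(20)  # a single response covers 10 and 20
print(rate, released)
```

A single collapsed acknowledgment therefore releases every client request whose keep-alive was sent at or before the acknowledged timestamp.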

Given the mechanism described so far, let’s try to estimate the maximum wait time of a client request that arrives at Titus Gateway for different scenarios. Let’s assume that the maximum keep-alive interval is 5ms, and the maximum interval between events emitted in Titus Job Coordinator is 2ms.

Assuming that the system runs idle (no changes made to the data), and the client request arrives at the moment a new keep-alive request wait time starts, the cache update latency is equal to 7 milliseconds (5ms keep-alive wait + 2ms event interval) + network propagation delay + processing time. If we ignore the processing time and assume that the network propagation delay is <1ms, given we only have to send back a small keep-alive response, we should expect a delay of up to 8ms in this scenario. If the client request does not have to wait for the keep-alive to be sent, and the keep-alive request is acknowledged immediately in Titus Job Coordinator, the delay is equal to network propagation delay + processing time, which we estimated to be <1ms. The average delay introduced by cache synchronization is around 4ms.
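The estimate above can be reproduced with a few lines of arithmetic. The sketch below is a back-of-the-envelope model rather than a measurement: it assumes, as an illustration only, that a request arrives at a uniformly random point within the keep-alive interval and within the event interval, so the expected wait for each is half of its maximum.

```python
KEEP_ALIVE_INTERVAL_MS = 5.0  # maximum keep-alive batching wait (from the text)
EVENT_INTERVAL_MS = 2.0       # maximum gap between emitted events (from the text)
NETWORK_DELAY_MAX_MS = 1.0    # upper bound on propagation delay (from the text)

# Worst case: wait out both full intervals, plus the network delay.
worst_case_ms = KEEP_ALIVE_INTERVAL_MS + EVENT_INTERVAL_MS + NETWORK_DELAY_MAX_MS

# Expected case under the uniform-arrival assumption (an assumption of this sketch).
expected_wait_ms = KEEP_ALIVE_INTERVAL_MS / 2 + EVENT_INTERVAL_MS / 2
expected_lower_ms = expected_wait_ms                         # negligible network delay
expected_upper_ms = expected_wait_ms + NETWORK_DELAY_MAX_MS  # network delay at its bound

print(worst_case_ms, expected_lower_ms, expected_upper_ms)
```

Under these assumptions the expected delay falls between 3.5ms and 4.5ms, which is consistent with the average of around 4ms quoted above.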

Network propagation delays and stream processing times start to become a more important factor as the number of state change events and client requests increases. However, Titus Job Coordinator can now dedicate its capacity to serving high-bandwidth streams to a finite number of Titus Gateways, relying on the gateway instances to serve client requests, instead of serving payloads to all client requests itself. Titus Gateways can then be scaled out to match client request volumes.

We ran empirical tests for scenarios of low and high request volumes, and the results are presented in the next section.

To show how the system performs with and without the caching mechanism, we ran two tests:

- A test with a low/moderate load, showing a median latency increase due to overhead from the cache synchronization mechanism, but better 99th percentile latencies.

- A test with load close to the peak of Titus Job Coordinator capacity, above which the original system collapses. Previous results hold, showing better scalability with the caching solution.

A single request in the tests below consists of one query. The query is of a moderate size: a collection of 100 records, with a serialized response size of ~256KB. The total payload (response size times the number of requests served per second) requires a network bandwidth of ~2Gbps in the first test and ~8Gbps in the second.
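As a quick sanity check of these bandwidth figures, the following Python snippet multiplies the response size by the query rates used in the two tests (1K and 4K requests/second). Treating 1KB as 1024 bytes and the name `required_gbps` are choices made for this sketch.

```python
RESPONSE_SIZE_BYTES = 256 * 1024  # ~256KB serialized response (assuming 1KB = 1024 bytes)


def required_gbps(queries_per_second):
    """Network bandwidth in Gbps needed to serve the responses at the given rate."""
    bits_per_second = RESPONSE_SIZE_BYTES * 8 * queries_per_second
    return bits_per_second / 1e9


moderate_gbps = required_gbps(1_000)  # first test: 1K requests/second
peak_gbps = required_gbps(4_000)      # second test: 4K requests/second
print(round(moderate_gbps, 2), round(peak_gbps, 2))
```

Both values round to the ~2Gbps and ~8Gbps figures quoted above.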

Moderate load level

This test shows the impact of cache synchronization on query latency in a moderately loaded system. The query rate in this test is set to 1K requests/second.

Median latency without caching is half of what we observe with the introduction of the caching mechanism, due to the added synchronization delays. In exchange, the worst-case 99th percentile latencies are 90% lower, dropping from 292 milliseconds without a cache to 30 milliseconds with the cache.

Load level close to Titus Job Coordinator maximum

If Titus Job Coordinator has to handle all query requests (when the cache is not enabled), it handles the traffic well up to 4K test queries/second, and breaks down (sharp latency increase and a rapid drop of throughput) at around 4.5K queries/second. The maximum load test is thus kept at 4K queries/second.

Without caching enabled the 99th percentile hovers around 1000ms, and the 80th percentile is around 336ms, compared with the cache-enabled 99th percentile at 46ms and 80th percentile at 22ms. The median still looks better on the setup without a cache, at 17ms vs 19ms when the cache is enabled. It should be noted, however, that the system with caching enabled scales out linearly to more request load while keeping the same latency percentiles, while the no-cache setup collapses with a mere ~15% additional load.

Doubling the load when caching is enabled does not increase the latencies at all. Here are latency percentiles when running 8K query requests/second:

After reaching the limit of vertical scaling of our previous system, we were pleased to implement a real solution that provides (in a practical sense) unlimited scalability of the Titus read-only API. We were able to achieve better tail latencies with a minor sacrifice in median latencies when traffic is low, and gained the ability to horizontally scale out our API gateway processing layer to handle growth in traffic without changes to API clients. The upgrade process was completely transparent, and no single client observed any abnormalities or changes in API behavior during and after the migration.

The mechanism described here can be applied to any system relying on a singleton leader-elected component as the source of truth for managed data, where the data fits in memory and latency is low.

As for prior art, there is ample coverage of cache coherence protocols in the literature, both in the context of multiprocessor architectures (Adve & Gharachorloo, 1996) and distributed systems (Gwertzman & Seltzer, 1996). Our work fits within the mechanisms of client polling and invalidation protocols explored by Gwertzman and Seltzer (1996) in their survey paper. Central timestamping to facilitate linearizability in read replicas is similar to the Calvin system (with example real-world implementations in systems like FoundationDB) as well as the replica watermarking in AWS Aurora.