By Jennifer Shin, Tejas Shikhare, Will Emmanuel

In 2022, a major change was made to Netflix’s iOS and Android applications. We migrated Netflix’s mobile apps to GraphQL with zero downtime, which involved a total overhaul from the client to the API layer.

Until recently, an internal API framework, Falcor, powered our mobile apps. They are now backed by Federated GraphQL, a distributed approach to APIs where domain teams can independently manage and own specific sections of the API.

Doing this safely for 100s of millions of customers without disruption is exceptionally challenging, especially considering the many dimensions of change involved. This blog post will share broadly-applicable techniques (beyond GraphQL) we used to perform this migration. The three strategies we will discuss today are AB Testing, Replay Testing, and Sticky Canaries.

Before diving into these techniques, let’s briefly examine the migration plan.

Before GraphQL: Monolithic Falcor API implemented and maintained by the API Team

Before moving to GraphQL, our API layer consisted of a monolithic server built with Falcor. A single API team maintained both the Java implementation of the Falcor framework and the API Server.

Created a GraphQL Shim Service on top of our existing Monolith Falcor API.

By the summer of 2020, many UI engineers were ready to move to GraphQL. Instead of embarking on a full-fledged migration top to bottom, we created a GraphQL shim on top of our existing Falcor API. The GraphQL shim enabled client engineers to move quickly onto GraphQL, figure out client-side concerns like cache normalization, experiment with different GraphQL clients, and investigate client performance without being blocked by server-side migrations. To launch Phase 1 safely, we used AB Testing.

Deprecate the GraphQL Shim Service and Legacy API Monolith in favor of GraphQL services owned by the domain teams.

We didn’t want the legacy Falcor API to linger forever, so we leaned into Federated GraphQL to power a single GraphQL API with multiple GraphQL servers.

We could also swap out the implementation of a field from GraphQL Shim to Video API with federation directives. To launch Phase 2 safely, we used Replay Testing and Sticky Canaries.

Two key factors determined our testing strategies:

- Functional vs. non-functional requirements

- Idempotency

If we were testing functional requirements like data accuracy, and if the request was idempotent, we relied on Replay Testing. We knew we could test the same query with the same inputs and consistently expect the same results.

We couldn’t replay check GraphQL queries or mutations that requested non-idempotent fields.

And we definitely couldn’t replay test non-functional requirements like caching and logging user interaction. In such cases, we weren’t testing for response data but overall behavior. So, we relied on higher-level metrics-based testing: AB Testing and Sticky Canaries.
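The decision rule described above can be summarized as a small lookup. This is only an illustrative sketch: the function name `choose_strategies` and its two boolean parameters are hypothetical and do not correspond to any Netflix tooling.

```python
from typing import List


def choose_strategies(functional: bool, idempotent: bool) -> List[str]:
    """Map the two factors from the text onto the testing strategies.

    Hypothetical helper for illustration only.
    """
    # Replay Testing compares response payloads, so it only fits functional
    # checks on requests that return the same result for the same inputs.
    if functional and idempotent:
        return ["Replay Testing"]
    # Everything else is judged by higher-level metrics rather than payloads.
    return ["AB Testing", "Sticky Canaries"]
```

For example, a data-accuracy check on an idempotent query maps to Replay Testing, while a mutation or a caching concern maps to the metrics-based strategies.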

Let’s discuss the three testing strategies in further detail.

Netflix traditionally uses AB Testing to evaluate whether new product features resonate with customers. In Phase 1, we leveraged the AB testing framework to isolate a user segment into two groups totaling 1 million users. The control group’s traffic utilized the legacy Falcor stack, while the experiment population leveraged the new GraphQL client and was directed to the GraphQL Shim. To determine customer impact, we could compare various metrics such as error rates, latencies, and time to render.

We set up a client-side AB experiment that tested Falcor versus GraphQL and reported coarse-grained quality of experience metrics (QoE). The AB experiment results hinted that GraphQL’s correctness was not up to par with the legacy system. We spent the next few months diving into these high-level metrics and fixing issues such as cache TTLs, flawed client assumptions, etc.

Wins

High-Level Health Metrics: AB Testing provided the assurance we needed in our overall client-side GraphQL implementation. This helped us successfully migrate 100% of the traffic on the mobile homepage canvas to GraphQL in 6 months.

Gotchas

Error Diagnosis: With an AB test, we could see coarse-grained metrics which pointed to potential issues, but it was challenging to diagnose the exact issues.

The next phase in the migration was to reimplement our existing Falcor API in a GraphQL-first server (Video API Service). The Falcor API had become a logic-heavy monolith with over a decade of tech debt. So we had to ensure that the reimplemented Video API server was bug-free and identical to the already productized Shim service.

We developed a Replay Testing tool to verify that idempotent APIs were migrated correctly from the GraphQL Shim to the Video API service.

The Replay Testing framework leverages the @override directive available in GraphQL Federation. This directive tells the GraphQL Gateway to route to one GraphQL server over another. Take, for instance, the following two GraphQL schemas defined by the Shim Service and the Video Service:

The GraphQL Shim first defined the certificationRating field (things like Rated R or PG-13) in Phase 1. In Phase 2, we stood up the VideoService and defined the same certificationRating field marked with the @override directive. The presence of the identical field with the @override directive informed the GraphQL Gateway to route the resolution of this field to the new Video Service rather than the old Shim Service.

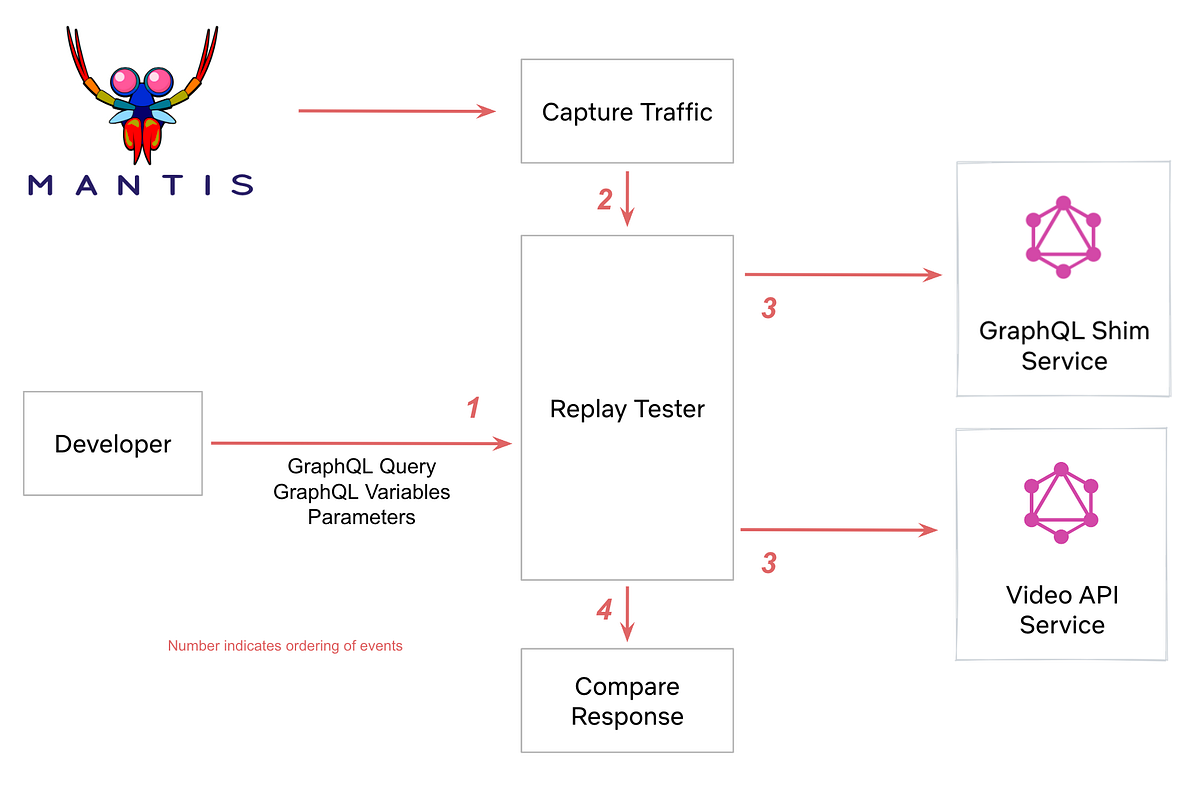
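The routing effect of @override can be illustrated with a toy model. The sketch below is not how a federation gateway is implemented; `FieldDefinition`, `resolve_owner`, and the subgraph names `shim` and `video` are hypothetical names used only to show that a definition carrying an override takes the field away from the subgraph it names.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class FieldDefinition:
    """One subgraph's definition of a field (toy model, hypothetical)."""
    subgraph: str
    override_from: Optional[str] = None  # subgraph named by @override, if any


def resolve_owner(definitions: List[FieldDefinition]) -> str:
    """Return the subgraph that should resolve the field."""
    # Subgraphs that some other definition has overridden lose the field.
    overridden = {d.override_from for d in definitions if d.override_from}
    owners = [d.subgraph for d in definitions if d.subgraph not in overridden]
    if len(owners) != 1:
        raise ValueError(f"expected exactly one owner, found {owners}")
    return owners[0]


# Phase 1: only the Shim defines certificationRating.
phase_1 = [FieldDefinition("shim")]
# Phase 2: the Video Service defines the same field and overrides the Shim.
phase_2 = [FieldDefinition("shim"), FieldDefinition("video", override_from="shim")]
```

With these inputs, `resolve_owner(phase_1)` selects the Shim and `resolve_owner(phase_2)` selects the Video Service, mirroring the routing change described above.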

The Replay Tester tool samples raw traffic streams from Mantis. With these sampled events, the tool can capture a live request from production and run an identical GraphQL query against both the GraphQL Shim and the new Video API service. The tool then compares the results and outputs any differences in response payloads.

Note: We do not replay test for Personally Identifiable Information. Replay testing is used only for non-sensitive product features on the Netflix UI.

Once the test is completed, the engineer can view the diffs displayed as a flattened JSON node. You can see the control value on the left side of the comma in parentheses and the experiment value on the right.

/data/videos/0/tags/3/id: (81496962, null)

/data/videos/0/tags/5/displayName: (Série, value: “S\303\251rie”)

We captured two diffs above: the first had missing data for an ID field in the experiment, and the second had an encoding difference. We also saw differences in localization, date precisions, and floating point accuracy. It gave us confidence in replicated business logic, where subscriber plans and user geographic location determined the customer’s catalog availability.
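A flattened diff of this kind can be produced with a few lines of code. The following is a minimal sketch, not the Replay Tester itself: `flatten` and `diff_responses` are hypothetical names, and the path format simply imitates the diffs shown above.

```python
from typing import Any, Dict, Iterator, Tuple


def flatten(node: Any, path: str = "") -> Iterator[Tuple[str, Any]]:
    """Yield (path, leaf value) pairs for a decoded JSON value."""
    if isinstance(node, dict) and node:
        for key, value in node.items():
            yield from flatten(value, f"{path}/{key}")
    elif isinstance(node, list) and node:
        for index, value in enumerate(node):
            yield from flatten(value, f"{path}/{index}")
    else:
        # Scalars, null, and empty containers are treated as leaves, so an
        # empty array and null remain distinguishable.
        yield path, node


def diff_responses(control: Any, experiment: Any) -> Dict[str, Tuple[Any, Any]]:
    """Return {path: (control value, experiment value)} for differing leaves.

    A path missing on one side is reported as None, i.e. a missing value is
    deliberately not distinguished from an explicit null in this sketch.
    """
    control_leaves = dict(flatten(control))
    experiment_leaves = dict(flatten(experiment))
    diffs: Dict[str, Tuple[Any, Any]] = {}
    for path in sorted(control_leaves.keys() | experiment_leaves.keys()):
        left = control_leaves.get(path)
        right = experiment_leaves.get(path)
        if left != right:
            diffs[path] = (left, right)
    return diffs
```

Calling `diff_responses` with the Shim response as `control` and the Video API response as `experiment` yields one entry per differing leaf, with the control value first, as in the examples above.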

Wins

- Confidence in parity between the two GraphQL Implementations

- Enabled tuning configs in cases where data was missing due to over-eager timeouts

- Tested business logic that required many (unknown) inputs and where correctness can be hard to eyeball

Gotchas

- PII and non-idempotent APIs should not be tested using Replay Tests, and it would be valuable to have a mechanism to prevent that.

- Manually constructed queries are only as good as the features the developer remembers to test. We ended up with untested fields simply because we forgot about them.

- Correctness: The idea of correctness can be confusing too. For example, is it more correct for an array to be empty or null, or is it just noise? Ultimately, we matched the existing behavior as much as possible because verifying the robustness of the client’s error handling was difficult.

Despite these shortcomings, Replay Testing was a key indicator that we had achieved functional correctness of most idempotent queries.

While Replay Testing validates the functional correctness of the new GraphQL APIs, it does not provide any performance or business metric insight, such as the overall perceived health of user interaction. Are users clicking play at the same rates? Are things loading in time before the user loses interest? Replay Testing also cannot be used for non-idempotent API validation. We reached for a Netflix tool called the Sticky Canary to build confidence.

A Sticky Canary is an infrastructure experiment where customers are assigned either to a canary or baseline host for the entire duration of an experiment. All incoming traffic is allocated to an experimental or baseline host based on their device and profile, similar to a bucket hash. The experimental host deployment serves all the customers assigned to the experiment. Watch our Chaos Engineering talk from AWS Reinvent to learn more about Sticky Canaries.
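The "bucket hash" idea can be sketched as a deterministic hash of the device and profile. This is an assumption-laden illustration rather than Netflix's implementation: the function `assign_cluster`, the use of SHA-256, the 10,000-bucket granularity, and the `unallocated` outcome for customers outside the experiment are all choices made for the example.

```python
import hashlib

BUCKETS = 10_000  # assumed granularity: one bucket is 0.01% of customers


def assign_cluster(device_id: str, profile_id: str, experiment_id: str,
                   allocation_bps: int) -> str:
    """Deterministically assign a customer to canary, baseline, or neither.

    allocation_bps is the share (in basis points, 0..5000) given to EACH of
    the canary and baseline groups. Hypothetical helper for illustration.
    """
    if not 0 <= allocation_bps <= BUCKETS // 2:
        raise ValueError("allocation_bps must be between 0 and 5000")
    # Hashing the same inputs always yields the same bucket, which is what
    # keeps a customer "stuck" to one cluster for the whole experiment.
    key = f"{experiment_id}:{device_id}:{profile_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    bucket = int.from_bytes(digest[:8], "big") % BUCKETS
    if bucket < allocation_bps:
        return "canary"
    if bucket < 2 * allocation_bps:
        return "baseline"
    return "unallocated"
```

Including the experiment identifier in the hash key means a customer who lands in the canary for one experiment is not automatically in the canary for the next one.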

In the case of our GraphQL APIs, we used a Sticky Canary experiment to run two instances of our GraphQL gateway. The baseline gateway used the existing schema, which routes all traffic to the GraphQL Shim. The experimental gateway used the new proposed schema, which routes traffic to the latest Video API service. Zuul, our primary edge gateway, assigns traffic to either cluster based on the experiment parameters.

We then collect and analyze the performance of the two clusters. Some KPIs we monitor closely include:

- Median and tail latencies

- Error rates

- Logs

- Resource utilization: CPU, network traffic, memory, disk

- Device QoE (Quality of Experience) metrics

- Streaming health metrics

We started small, with tiny customer allocations for hour-long experiments. After validating performance, we slowly built up scope. We increased the percentage of customer allocations, introduced multi-region tests, and eventually ran 12-hour or day-long experiments. Validating along the way is essential since Sticky Canaries impact live production traffic and are assigned persistently to a customer.

After several sticky canary experiments, we had assurance that phase 2 of the migration improved all core metrics, and we could dial up GraphQL globally with confidence.

Wins

Sticky Canaries were essential to build confidence in our new GraphQL services.

- Non-Idempotent APIs: these tests are compatible with mutating or non-idempotent APIs

- Business metrics: Sticky Canaries validated our core Netflix business metrics had improved after the migration

- System performance: Insights into latency and resource usage help us understand how scaling profiles change after migration

Gotchas

- Negative Customer Impact: Sticky Canaries can impact real users. We needed confidence in our new services before persistently routing some customers to them. This is partially mitigated by real-time impact detection, which will automatically cancel experiments.

- Short-lived: Sticky Canaries are meant for short-lived experiments. For longer-lived tests, a full-blown AB test should be used.

Technology is constantly changing, and we, as engineers, spend a large part of our careers performing migrations. The question is not whether we are migrating but whether we are migrating safely, with zero downtime, in a timely manner.

At Netflix, we have developed tools that ensure confidence in these migrations, targeted toward each specific use case being tested. We covered three tools, AB testing, Replay Testing, and Sticky Canaries, that we used for the GraphQL Migration.

This blog post is part of our Migrating Critical Traffic series. Also, check out: Migrating Critical Traffic at Scale (part 1, part 2) and Ensuring the Successful Launch of Ads.